Integreat has been selected as one of 10 finalists in the Google.org Impact Challenge in the category “lighthouse projects”! This secures €250,000 of funding, which we will use to expand our job-market capabilities.


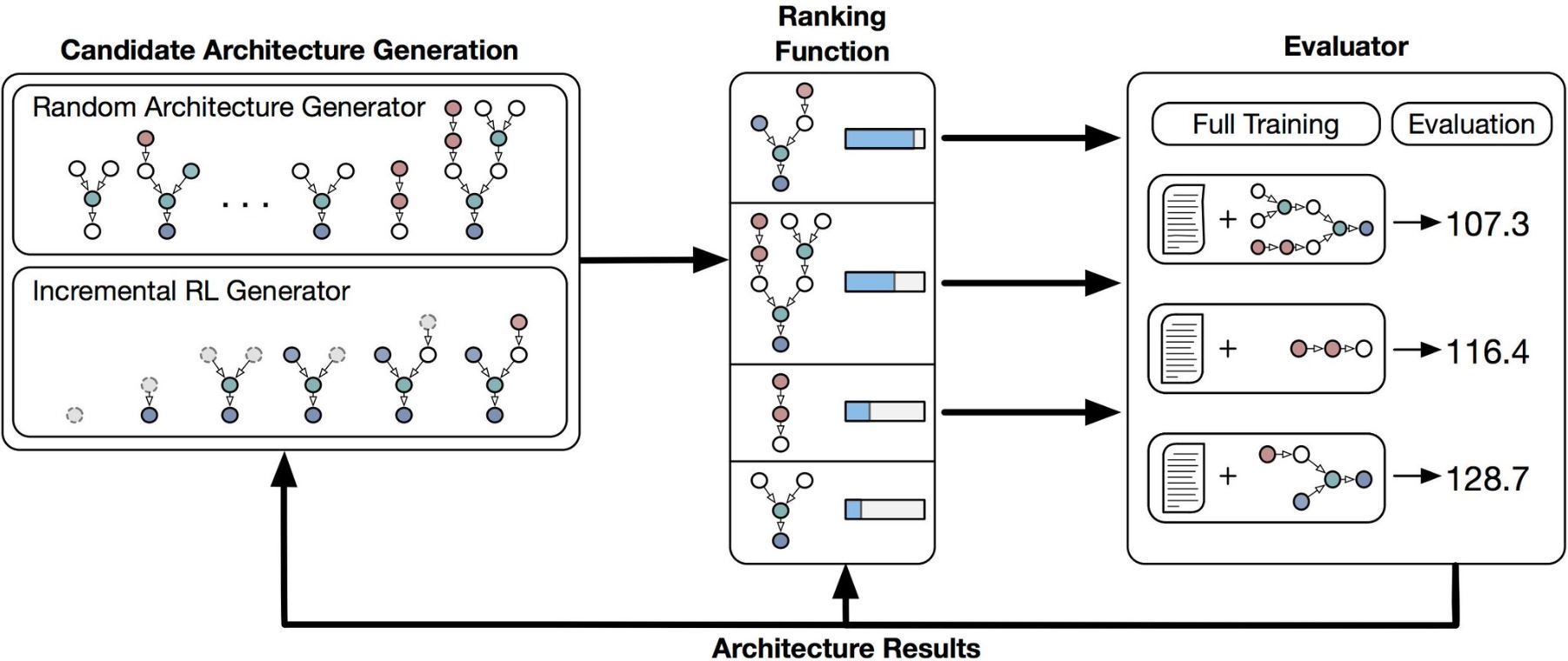

Searching for non-intuitive architectures

My summer internship work is out at ICLR! Automatic architecture search finds non-intuitive (at least to me) architectures that include sine curves and division.

I’m really glad to have worked with a fantastic team at Salesforce Research, most closely with Stephen Merity and Richard Socher.

Blog: https://einstein.ai/research/domain-specific-language-for-automated-rnn-architecture-search

Paper + Reviews: https://openreview.net/forum?id=SkOb1Fl0Z
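To make “architectures that include sine curves and division” a bit more concrete, here is a toy sketch: a recurrent cell written as an expression tree over a small operator set, sampled at random and unrolled over a sequence. The operator set, the names (`sample`, `evaluate`, `run_cell`), the protected division and the scalar hidden state are all illustrative assumptions, not the DSL from the paper, and the step where a real search trains and ranks each candidate is left out entirely.

```python
import math
import random

# Hypothetical operator set for illustration only; not the paper's actual DSL.
UNARY = {"tanh": math.tanh, "sin": math.sin}
BINARY = {
    "add": lambda a, b: a + b,
    "mult": lambda a, b: a * b,
    # "protected" division so randomly sampled cells cannot divide by zero
    "div": lambda a, b: a / b if abs(b) > 1e-6 else a,
}
LEAVES = ["x_t", "h_prev"]  # current input and previous hidden state (scalars here)


def sample(depth, rng):
    """Sample a random expression tree describing a toy recurrent cell."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(LEAVES)
    if rng.random() < 0.5:
        return (rng.choice(sorted(UNARY)), sample(depth - 1, rng))
    return (rng.choice(sorted(BINARY)), sample(depth - 1, rng), sample(depth - 1, rng))


def evaluate(expr, env):
    """Evaluate an expression tree given values for the leaf variables."""
    if isinstance(expr, str):
        return env[expr]
    op, *args = expr
    vals = [evaluate(a, env) for a in args]
    return UNARY[op](*vals) if op in UNARY else BINARY[op](*vals)


def to_string(expr):
    """Render a tree in a readable prefix notation, e.g. div(sin(x_t), h_prev)."""
    if isinstance(expr, str):
        return expr
    op, *args = expr
    return f"{op}({', '.join(to_string(a) for a in args)})"


def run_cell(expr, inputs, h0=0.0):
    """Unroll the cell over a sequence: h_t = expr(x_t, h_{t-1})."""
    h = h0
    for x in inputs:
        h = evaluate(expr, {"x_t": x, "h_prev": h})
    return h


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        cell = sample(3, rng)
        print(to_string(cell), run_cell(cell, [0.5, 0.4, 0.3], h0=0.25))
```

A hand-written candidate such as `("tanh", ("add", ("sin", "x_t"), ("div", "h_prev", "x_t")))` is exactly the kind of cell a human designer would be unlikely to propose; in an actual search, each sampled tree would be compiled into a trainable cell and scored on a task.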

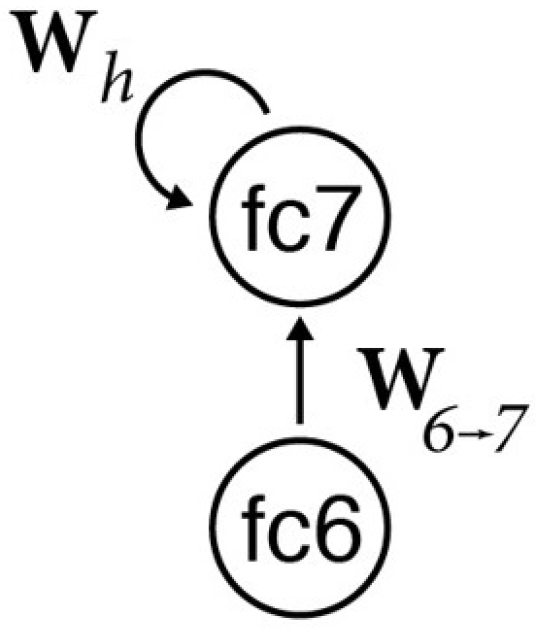

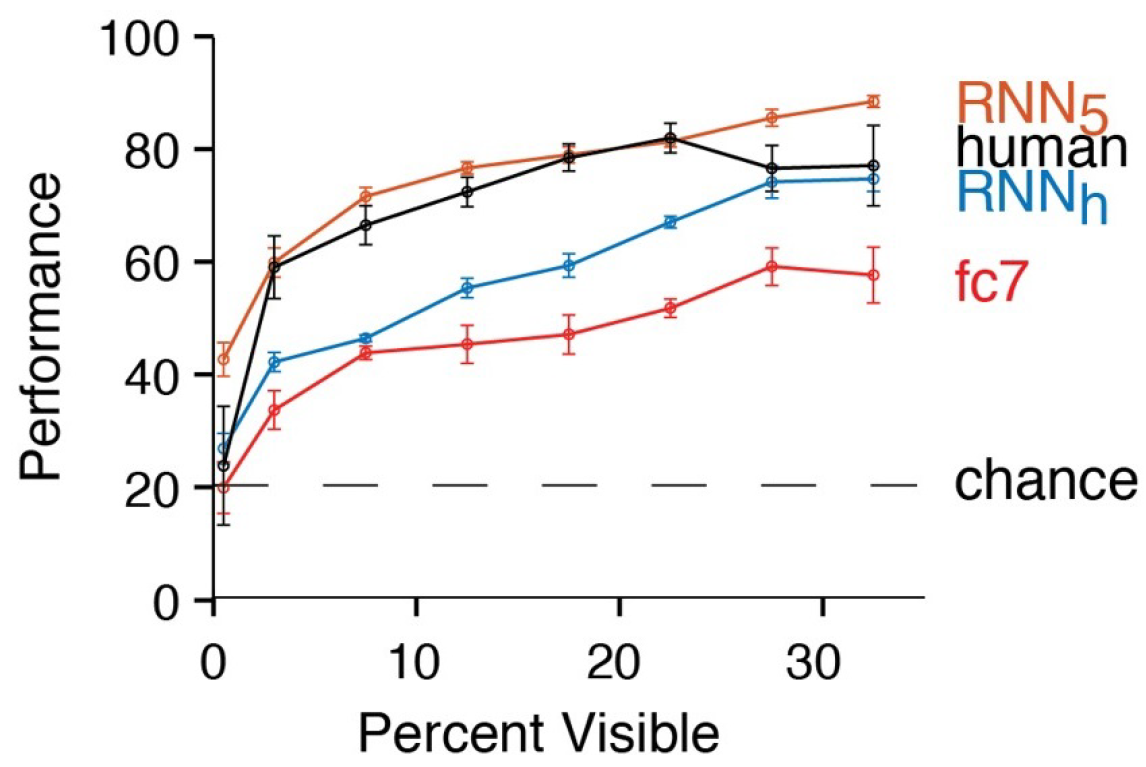

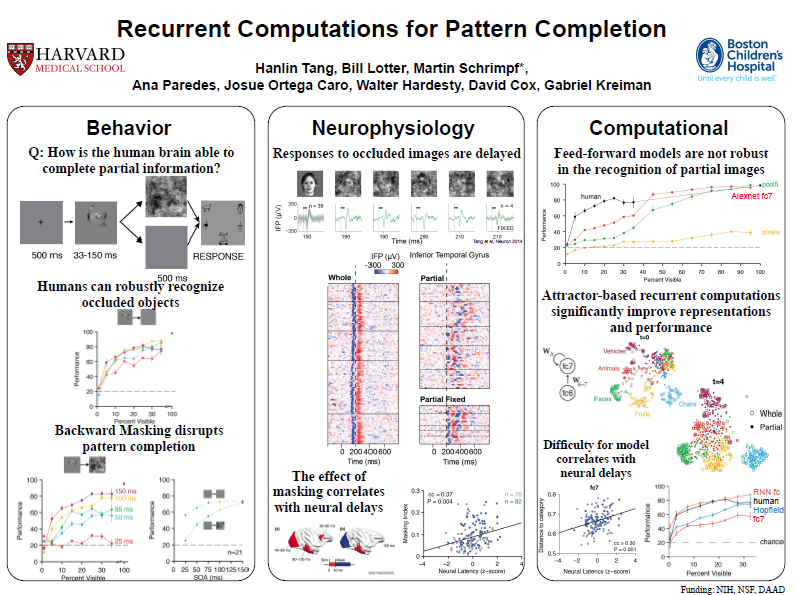

Master’s Thesis: Brain-inspired Recurrent Neural Algorithms for Advanced Object Recognition

It’s done! I finished my Master’s Thesis, which focuses on the idea and implementation of recurrent neural networks in computer vision, inspired by findings in neuroscience. The two main applications of this technique shown in the thesis are the recognition of partially occluded objects and the integration of context cues.

Here’s the link: Brain-inspired Recurrent Neural Algorithms for Advanced Object Recognition – Martin Schrimpf

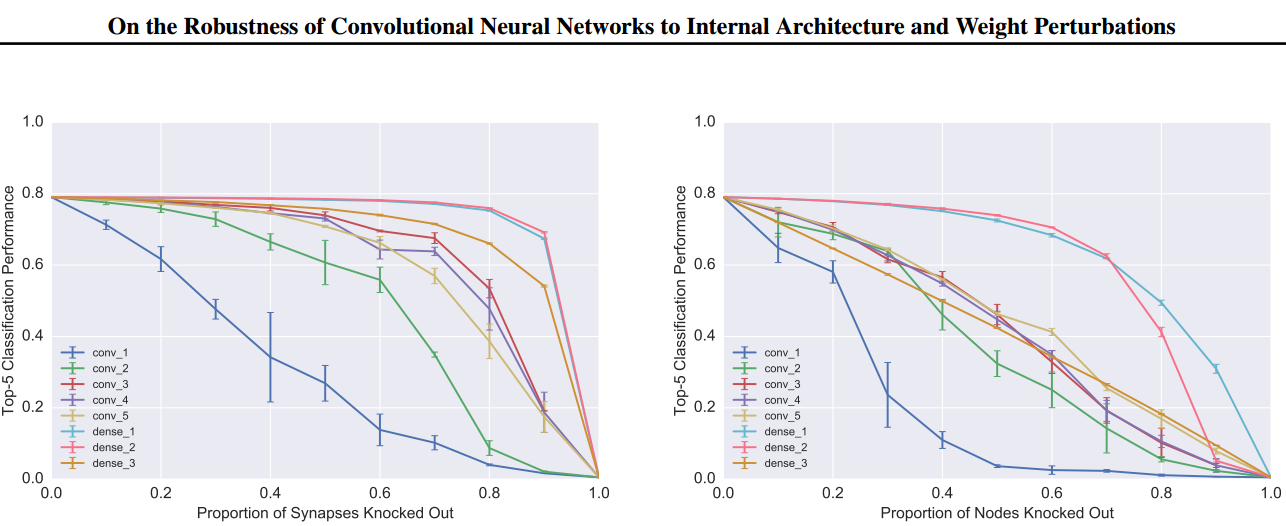

On the robustness of neural networks

We are beginning to look into a new project that analyzes today’s neural networks in terms of stability and plasticity.

Specifically, we evaluate how well these networks cope with changes to their weights and how well they adapt to new information. Preliminary results suggest that perturbing the weights in lower layers hurts performance more severely than perturbing those in higher layers. This parallels a common assumption in neuroscience: that the hierarchically lower areas of our visual cortex remain relatively fixed over the years.
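A minimal sketch of this kind of layer-wise perturbation experiment, assuming a small fully connected ReLU network, additive Gaussian noise on a single layer's weights, and the mean squared change in the network's outputs as a stand-in for the drop in task performance that the project actually measures. All function names are hypothetical; this is not the project's code, and a random, untrained network like the one below will not necessarily reproduce the lower-versus-higher-layer effect.

```python
import random


def matvec(W, x):
    """Multiply weight matrix W (list of rows) with vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def forward(layers, x):
    """Bias-free fully connected network; ReLU after every layer except the last."""
    for i, W in enumerate(layers):
        x = matvec(W, x)
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def perturb_layer(layers, index, scale, rng):
    """Return a copy of `layers` with Gaussian noise (std `scale`) added to layer `index` only."""
    perturbed = [[row[:] for row in W] for W in layers]
    perturbed[index] = [[w + rng.gauss(0.0, scale) for w in row] for row in perturbed[index]]
    return perturbed


def output_deviation(layers, perturbed, inputs):
    """Mean squared difference between the original and perturbed network outputs."""
    total, count = 0.0, 0
    for x in inputs:
        a, b = forward(layers, x), forward(perturbed, x)
        total += sum((ai - bi) ** 2 for ai, bi in zip(a, b))
        count += len(a)
    return total / count


def random_network(sizes, rng):
    """sizes = [input_dim, hidden..., output_dim]; weights drawn from N(0, 1/fan_in)."""
    return [[[rng.gauss(0.0, (1.0 / n_in) ** 0.5) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])]


if __name__ == "__main__":
    rng = random.Random(0)
    net = random_network([8, 16, 16, 4], rng)
    inputs = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(32)]
    for layer in range(len(net)):
        noisy = perturb_layer(net, layer, scale=0.1, rng=rng)
        print(f"layer {layer}: output deviation {output_deviation(net, noisy, inputs):.4f}")
```

Perturbing one layer at a time while keeping the noise scale fixed is what makes the per-layer numbers comparable; with a trained model one would replace `output_deviation` with test accuracy.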

Update: we just uploaded a version to arXiv (https://arxiv.org/abs/1703.08245), which is currently under review at ICML.

NIPS Brains & Bits Poster

Just presented our work on Recurrent Computations for Pattern Completion at the NIPS 2016 Brains & Bits Workshop!

Here’s the poster that I presented.

It was an awesome conference, with lots of new work and amazing people.

Here’s a really short summary, but I highly recommend going through the papers and talks:

- unsupervised learning and GANs are hot

- learning to learn is becoming hot

- new threshold for deep: 1202 layers

TensorFlow seminar paper on arXiv

After some requests, I have uploaded my (really short) analysis of Google’s TensorFlow to arXiv: https://arxiv.org/abs/1611.08903.

It is really just a small seminar paper. The main finding is that, while using any machine learning framework is generally a good idea, TensorFlow has a really good chance of sticking around due to its already widespread use within Google and in research, coupled with a growing community.

Integration Prize

We just won the Integration Prize of the Government of Swabia with Integreat!

Scalable Database Concurrency Control using Transactional Memory

Although it’s been a while, I thought I’d upload my Bachelor’s Thesis for others to read: Scalable Database Concurrency Control using Transactional Memory.pdf.

The work consists of two parts:

Part 1 analyzes the constraints of Hardware Transactional Memory (HTM) and identifies the data structures that profit most from this technique.

Part 2 tries several HTM-based concurrency control implementations in MySQL’s InnoDB storage engine and evaluates the results.
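One common way HTM is put to work is lock elision: run a critical section as a hardware transaction, retry on transient aborts, and fall back to an ordinary lock otherwise. The sketch below is a pure-Python simulation of that pattern, assuming just two abort kinds: "conflict" (transient, worth retrying) and "capacity" (the transaction touches more data than the hardware can track, so retrying will not help). No real HTM is involved; on x86 the real thing would use RTM intrinsics such as `_xbegin`/`_xend`. Every name here (`make_simulated_htm`, `elided_execute`, `MAX_RETRIES`) is hypothetical, and this is not the implementation from the thesis.

```python
import threading

MAX_RETRIES = 3  # hypothetical retry budget for transient (conflict) aborts


def make_simulated_htm(footprint, capacity, conflicts_before_commit=0):
    """Return a fake 'hardware transaction' attempt function, for illustration only.

    An attempt aborts with "capacity" whenever footprint > capacity (this repeats
    on every retry), or with "conflict" for the first `conflicts_before_commit`
    attempts (transient aborts). Aborted attempts never run `body`, mimicking
    the rollback of a real transaction.
    """
    state = {"conflicts_left": conflicts_before_commit}

    def try_transaction(body):
        if footprint > capacity:
            return False, "capacity", None
        if state["conflicts_left"] > 0:
            state["conflicts_left"] -= 1
            return False, "conflict", None
        return True, None, body()  # committed: the body's effects become visible

    return try_transaction


def elided_execute(body, try_transaction, fallback_lock, stats):
    """Run `body` speculatively, falling back to a global lock (lock elision)."""
    for _ in range(MAX_RETRIES):
        committed, reason, result = try_transaction(body)
        if committed:
            stats["commits"] += 1
            return result
        stats["aborts"] += 1
        if reason == "capacity":
            break  # the data does not fit, so another attempt would abort again
    with fallback_lock:
        stats["fallbacks"] += 1
        return body()


if __name__ == "__main__":
    lock = threading.Lock()
    stats = {"commits": 0, "aborts": 0, "fallbacks": 0}
    small = make_simulated_htm(footprint=4, capacity=8, conflicts_before_commit=1)
    large = make_simulated_htm(footprint=64, capacity=8)
    print(elided_execute(lambda: "small", small, lock, stats))
    print(elided_execute(lambda: "large", large, lock, stats))
    print(stats)  # {'commits': 1, 'aborts': 2, 'fallbacks': 1}
```

The simulation shows why footprint matters: operations with a small footprint usually commit speculatively, while ones with a large footprint always end up serialized on the fallback lock. A real elision scheme also reads the fallback lock inside the transaction so that it aborts when another thread holds the lock; the simulation leaves that out.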