We previously found GPT-2 to be a strong model of the human language system (pnas.org/doi/10.1073/pn).

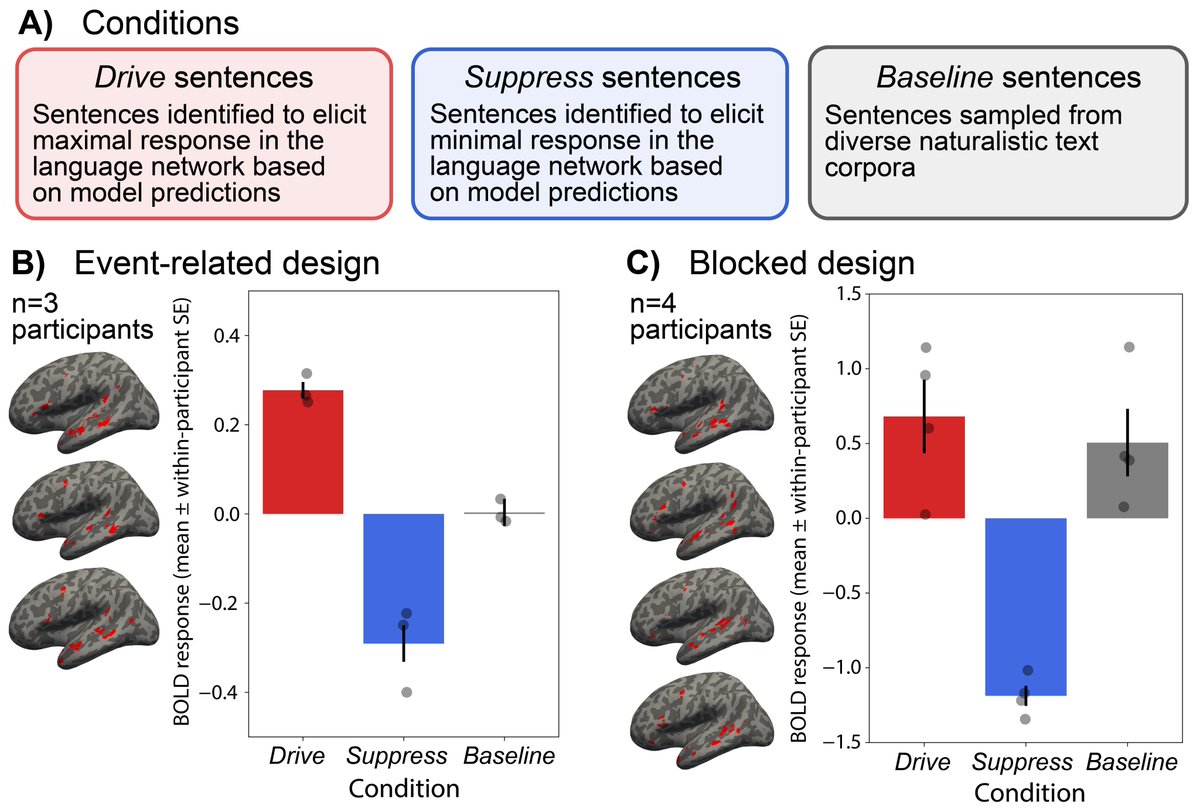

In a new paper led by Greta Tuckute, we push this further and test how well model-selected sentences can modulate neural activity. Turns out you can almost double, or completely suppress, activity relative to baseline.

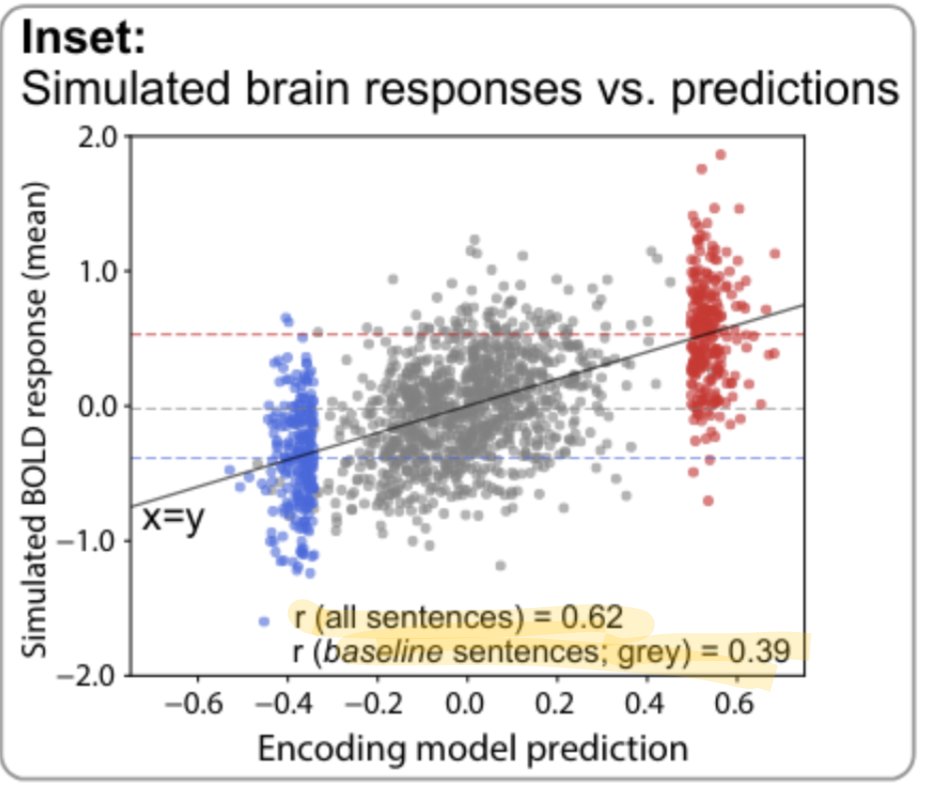

Under reasonable assumptions about inter-subject noise, prediction accuracy of neural activity is ~70% as good as it could possibly be. So even with these edge-case stimuli, GPT2-XL accounts for over 2/3 of the variance in the human language system.
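
For readers unfamiliar with the "as good as it could possibly be" framing: a common way to express this is to divide the model's raw prediction score by a noise ceiling, i.e. the best score any model could reach given measurement noise. Here is a minimal sketch of that idea, assuming a Pearson correlation as the raw score. The function names, the toy numbers, and the ceiling value are all made up for illustration and are not taken from the paper.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def ceiling_normalized(raw_score, ceiling):
    """Fraction of the noise-limited (achievable) score that the model reaches."""
    if ceiling <= 0:
        raise ValueError("ceiling must be positive")
    return raw_score / ceiling


# Toy values, invented for illustration only (not data from the paper).
predicted = [0.1, 0.4, 0.35, 0.8, 0.9]   # hypothetical model predictions
observed = [0.0, 0.5, 0.2, 0.7, 1.0]     # hypothetical measured responses
hypothetical_ceiling = 0.98              # assumed inter-subject consistency

raw = pearson_r(predicted, observed)
print(round(ceiling_normalized(raw, hypothetical_ceiling), 2))
```

A normalized value of 0.7 would then read as "70% of what is achievable given the noise", which is the sense of the ~70% figure above. How the ceiling itself is estimated depends on the noise assumptions and is not shown here.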